Speed Bump on the Way to Exascale Computing

The next stop on the road to exascale computing is systems that perform in the 10-petaFLOPS or greater range. But a planned system to test many of the needed technologies to reach that goal has been put on hold.

Peak processing power of the world’s top supercomputers has increased roughly along the lines of a traditional Moore’s Law growth curve. But that’s not to say a continuation of this growth is a given. One effort to test the technologies necessary to increase the performance of future systems has hit a snag.

[Image: Argonne National Laboratory will use IBM's next-generation Blue Gene/Q supercomputer, a system with a peak performance of 10 petaFLOPS.]

Earlier this month, IBM and the National Center for Supercomputing Applications (NCSA) put a halt to the Blue Waters project. The project’s goal was to deliver a supercomputer capable of sustained performance of 1 petaFLOPS on a range of real-world science and engineering applications.

Once completed, the Blue Waters system would have been one of the most powerful supercomputers in the world. Admittedly, about 10 systems in use today are already more powerful. But Blue Waters, like several other systems under development, would have tested the technologies needed to push performance into the double-digit petaFLOPS range.

The joint statement from IBM and NCSA about the suspension of the work on Blue Waters touched on the nature of the problems encountered. “The innovative technology that IBM ultimately developed was more complex and required significantly increased financial and technical support by IBM beyond its original expectations,” the teams said in a release.

Other projects for systems in the 10- to 20-petaFLOPS range remain on track, with expected delivery dates as early as next year. But these and future systems face some significant challenges.

For example, system developers find they must increase processing power while curbing energy consumption. Twenty-nine systems on the current Top 500 list of the world’s most powerful supercomputers use more than 1 megawatt (MW) of power. And the No. 1 system, the K Computer at the RIKEN Advanced Institute for Computational Science in Kobe, Japan, consumes 9.89 MW.
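To put these power figures in perspective, the short Python sketch below computes the K Computer's energy efficiency and extrapolates the power an exaFLOPS machine would need at that same efficiency. The 9.89 MW figure comes from the article; the 8.162-petaFLOPS Linpack score is the K Computer's June 2011 Top 500 result, added here as an outside figure. Holding efficiency constant while scaling up is purely an illustrative assumption.

```python
# Energy-efficiency arithmetic for the K Computer (June 2011 Top 500 figures).
# The 9.89 MW power draw is from the article; the 8.162 petaFLOPS Linpack
# (Rmax) score is an outside figure added for illustration.
K_RMAX_FLOPS = 8.162e15   # sustained Linpack performance, FLOPS
K_POWER_WATTS = 9.89e6    # power draw, watts


def flops_per_watt(rmax_flops: float, power_watts: float) -> float:
    """Sustained FLOPS delivered per watt of power drawn."""
    return rmax_flops / power_watts


def power_needed(target_flops: float, efficiency: float) -> float:
    """Watts needed to reach target_flops at a fixed FLOPS-per-watt efficiency."""
    return target_flops / efficiency


eff = flops_per_watt(K_RMAX_FLOPS, K_POWER_WATTS)
print(f"K Computer: {eff / 1e6:.0f} MFLOPS per watt")

# Assumption: an exaFLOPS system built with the same efficiency.
exa_watts = power_needed(1e18, eff)
print(f"1 exaFLOPS at the same efficiency: {exa_watts / 1e9:.2f} GW")
```

At roughly 825 MFLOPS per watt, an exaFLOPS machine would draw on the order of 1.2 gigawatts, which is why efficiency, not just raw speed, dominates exascale planning.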

One approach has been to build systems from large numbers of processors, specifically CPUs based on multi-core architectures. Such processors can run at lower clock speeds and thus consume less power. The shift to multi-core CPUs is evident in recent Top 500 lists. The most recent list, released by Top500.org in June 2011, included 212 systems using processors with six or more cores, compared with only 95 such systems on the previous list (released November 2010).
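Why lower clock speeds save power can be sketched with the textbook first-order model of CMOS dynamic power, P = C·V²·f. The Python example below assumes, for illustration only, that supply voltage scales in proportion to clock frequency; the capacitance, clock and voltage values are hypothetical. Under those assumptions, two cores at half the clock deliver the same nominal throughput as one full-speed core for about a quarter of the power.

```python
# First-order CMOS dynamic-power model: P = C * V**2 * f.
# Assumption (illustrative only): supply voltage scales linearly with clock
# frequency, so power grows roughly with f**3. Real chips deviate from this,
# especially near their minimum operating voltage.


def dynamic_power(capacitance: float, voltage: float, freq_hz: float) -> float:
    """Switching power of one core, in watts."""
    return capacitance * voltage**2 * freq_hz


def config_power(cores: int, freq_hz: float, base_freq_hz: float,
                 base_voltage: float, capacitance: float) -> float:
    """Total power for `cores` identical cores, scaling voltage with frequency."""
    voltage = base_voltage * (freq_hz / base_freq_hz)
    return cores * dynamic_power(capacitance, voltage, freq_hz)


C = 1e-9     # hypothetical switched capacitance, farads
F0 = 3.0e9   # hypothetical baseline clock, Hz
V0 = 1.2     # hypothetical baseline voltage, volts

one_fast = config_power(1, F0, F0, V0, C)       # 1 core at full clock
two_slow = config_power(2, F0 / 2, F0, V0, C)   # 2 cores at half clock

# Same nominal throughput (cores * clock), assuming the work parallelizes perfectly.
print(f"1 core  @ {F0 / 1e9:.1f} GHz: {one_fast:.2f} W")
print(f"2 cores @ {F0 / 2e9:.1f} GHz: {two_slow:.2f} W")
print(f"ratio: {two_slow / one_fast:.2f}")
```

Real processors cannot lower voltage indefinitely, and not all work parallelizes perfectly, so actual savings are smaller than this idealized ratio.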

But using more nodes, more processors and more cores to increase performance introduces another potential problem: special software is needed to ensure that communication and data passing between cores and nodes do not consume a significant share of CPU cycles.
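A toy cost model helps show why communication overhead becomes the bottleneck as core counts grow. The Python sketch below assumes a fixed amount of work split evenly across p processors, followed by a tree-based reduction that takes one message hop per level (about log2 p levels). The compute time and per-hop latency are hypothetical values, not measurements of any real system.

```python
import math

# Toy strong-scaling model (hypothetical parameters): a fixed job is split
# across p processors, and each step ends with a tree-based reduction whose
# latency grows with log2(p).


def step_time(p: int, compute_s: float, latency_s: float) -> float:
    """Time for one step: compute shared p ways plus ceil(log2(p)) message hops."""
    hops = math.ceil(math.log2(p)) if p > 1 else 0
    return compute_s / p + latency_s * hops


def parallel_efficiency(p: int, compute_s: float, latency_s: float) -> float:
    """Speedup over one processor, divided by p."""
    return step_time(1, compute_s, latency_s) / (p * step_time(p, compute_s, latency_s))


COMPUTE_S = 1.0    # hypothetical single-processor compute time per step, seconds
LATENCY_S = 1e-5   # hypothetical per-hop message latency (10 microseconds)

for p in (1, 1_000, 100_000, 10_000_000):
    eff = parallel_efficiency(p, COMPUTE_S, LATENCY_S)
    print(f"p={p:>10,}: efficiency {eff:.1%}")
```

As p grows, the shrinking compute share is overtaken by the growing number of message hops, and parallel efficiency falls sharply. Software that overlaps computation with communication, or reduces the communication itself, targets exactly that term.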

Another approach gaining favor is to augment CPU power with hardware-based accelerators, including application-specific integrated circuits (ASICs), field-programmable gate arrays (FPGAs) and graphics processing units (GPUs). On the current list, 19 systems use GPUs.
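A common first estimate of what an accelerator can buy is Amdahl's law: if a fraction f of the run time moves to a device that runs it s times faster, the overall speedup is 1 / ((1 - f) + f / s). The Python sketch below uses hypothetical values of f and s and ignores the cost of moving data between the CPU and the accelerator, which in practice can erode much of the gain.

```python
# Amdahl's-law estimate for offloading part of a workload to an accelerator.
# f: fraction of the original run time that can be offloaded (hypothetical).
# s: how much faster the accelerator runs that fraction (hypothetical).
# CPU-accelerator data-transfer costs are ignored in this simple model.


def offload_speedup(f: float, s: float) -> float:
    """Overall speedup when fraction f of run time is accelerated s times."""
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must be between 0 and 1")
    if s <= 0:
        raise ValueError("s must be positive")
    return 1.0 / ((1.0 - f) + f / s)


for f in (0.5, 0.9, 0.99):
    print(f"f={f:.2f}, s=10x: overall {offload_speedup(f, 10.0):.2f}x")
```

Even a 10x accelerator yields less than a 2x overall gain when only half the run time can be offloaded, so the portion of an application that stays on the CPU sets the ceiling.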

Beyond these issues, and as noted in an earlier Smarter Technology blog post, an exascale computing study by the Defense Advanced Research Projects Agency (DARPA) identified other major challenges. They include the following:

The memory and storage challenge: Today's memory and disk drive systems consume too much power. New technology will be required.

The concurrency and locality challenge: This, too, is related to the power issue. In the past, performance increases came from a combination of faster processors (higher clock rates) and higher levels of parallelism. But increasing the clock rate also increased power consumption. Moving forward will require massive parallelism and software that can efficiently harness the power of perhaps 100 million cores.

The resiliency challenge: An exascale system with hundreds of millions of cores must continue operating when a failure occurs. This will require an autonomic system that can constantly monitor its own status and respond to fast-changing conditions.
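The resiliency problem follows from simple arithmetic. If components fail independently at constant rates, a system that fails whenever any one of them fails has a mean time between failures (MTBF) equal to the component MTBF divided by the number of components. The Python sketch below applies that model with a hypothetical per-core MTBF of 100,000 years; the figure is chosen for illustration, not taken from any real hardware.

```python
# System mean time between failures (MTBF) under a simple model:
# components fail independently at constant rates (exponential lifetimes),
# so the system failure rate is the sum of the component failure rates.

HOURS_PER_YEAR = 24 * 365


def system_mtbf_hours(component_mtbf_hours: float, components: int) -> float:
    """MTBF of a system that fails whenever any one of its components fails."""
    return component_mtbf_hours / components


# Hypothetical per-core MTBF of 100,000 years, for illustration only.
CORE_MTBF_HOURS = 100_000 * HOURS_PER_YEAR

for cores in (100_000, 1_000_000, 100_000_000):
    mtbf = system_mtbf_hours(CORE_MTBF_HOURS, cores)
    print(f"{cores:>11,} cores: a failure every {mtbf:.1f} hours")
```

Even with very reliable parts, a machine with 100 million cores would, in this model, see a failure roughly every nine hours, which is why continuous self-monitoring and recovery become requirements rather than options.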

If these obstacles can be overcome, increasingly sophisticated applications stand to benefit from the greater computational muscle of next-generation supercomputers.

Source: Smarter Technology

- 507 reads

Human Rights

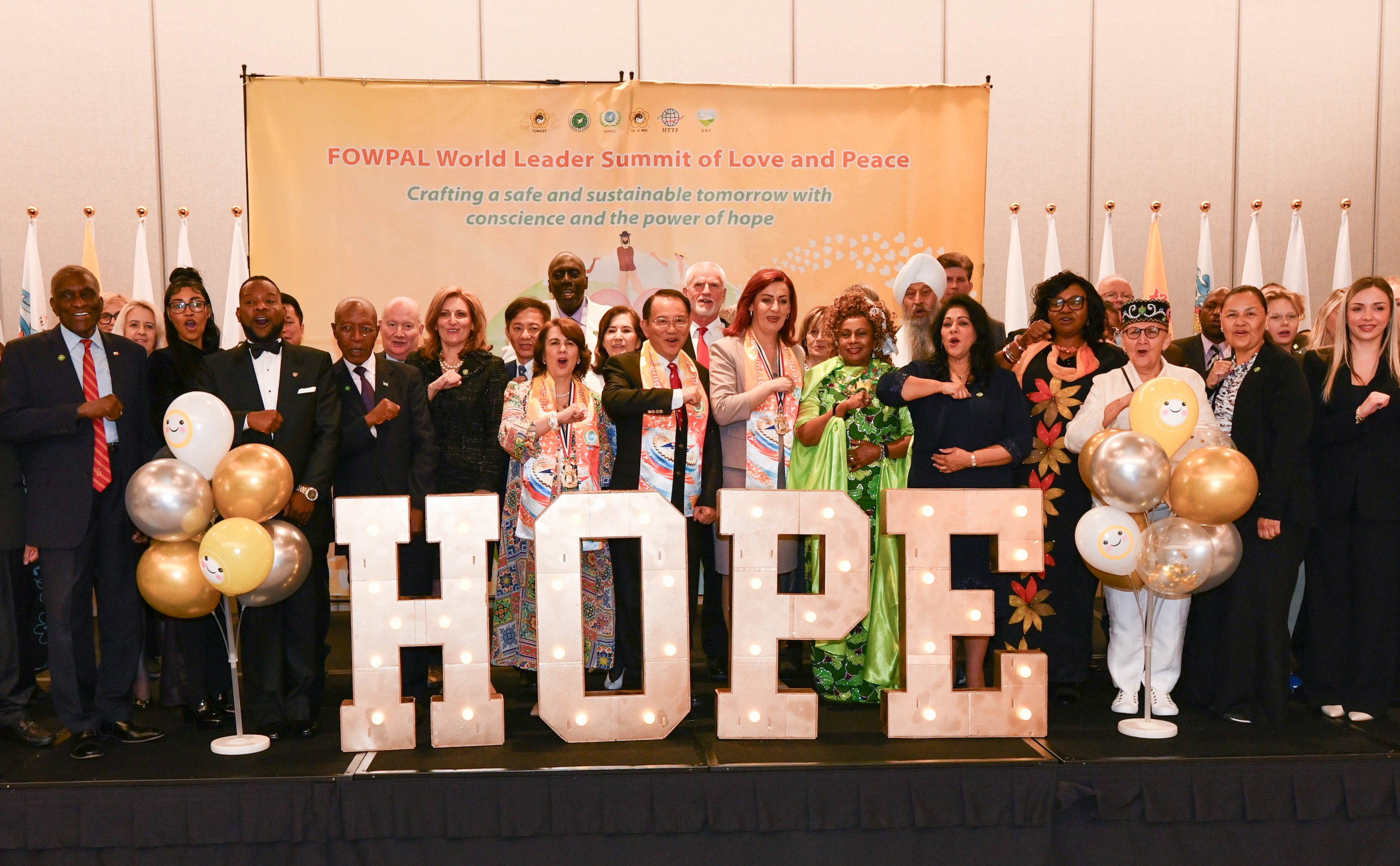

Fostering a More Humane World: The 28th Eurasian Economic Summi

Conscience, Hope, and Action: Keys to Global Peace and Sustainability

Ringing FOWPAL’s Peace Bell for the World:Nobel Peace Prize Laureates’ Visions and Actions

Protecting the World’s Cultural Diversity for a Sustainable Future

Puppet Show I International Friendship Day 2020