Russia Expands Facial Recognition Despite Privacy Concerns

Lack of Accountability, Oversight, Data Protection

Russian authorities are planning to expand the use of CCTV cameras with facial recognition software, despite serious concerns about the lack of regulation, oversight, and data protection, Human Rights Watch said on October 2, 2020.

On September 25, 2020, the Kommersant daily reported that CCTV cameras with facial recognition software, already in use in Moscow, will be installed by regional authorities in public spaces and at the entrances to apartment buildings in 10 pilot cities across Russia, with the stated aim of protecting public safety. Moscow authorities are also planning to expand the use of this technology by installing CCTV cameras with facial recognition software in trams and on 25 percent of all metro trains.

“The authorities’ intention to expand the use of invasive technology across the country causes serious concern over the potential threat to privacy,” said Hugh Williamson, Europe and Central Asia director at Human Rights Watch. “Russia’s track record of rights violations means that the authorities should be prepared to answer tough questions to prove they are not undermining people’s rights by pretending to protect public safety.”

The expansion of facial recognition technology in Russia has already given rise to criticism by privacy groups and digital rights lawyers and prompted some legal action.

Russian national security laws and surveillance practices enable law enforcement agencies to access practically any data in the name of protecting public safety. Kirill Koroteev, head of the international justice program at Agora, a leading Russian network of human rights lawyers, says that there is no judicial or public oversight of surveillance methods in Russia, including facial recognition. Agora is attempting to challenge Moscow’s use of facial recognition technology at the European Court of Human Rights.

In the case of Roman Zakharov v. Russia, the European Court ruled that Russia’s legislation on surveillance “does not provide for adequate and effective guarantees against arbitrariness and the risk of abuse.”

A pattern of data breaches has been reported in connection with the integration of the facial recognition software into Moscow’s video surveillance. The reports highlight the lack of public scrutiny and accountability when it comes to the use of facial recognition technology by the Russian authorities for surveillance.

On September 16, Anna Kuznetsova of Roskomsvoboda, a prominent digital rights organization, filed a lawsuit against the Moscow Information Technology Department, alleging that she had easily gained access to the data gathered by 79 cameras in Moscow, including detailed information about her whereabouts over the course of one month, which turned out to be correct in 71 percent of cases. Kuznetsova said that this data was offered for sale on the “dark web,” the part of the internet where users are much more difficult to trace and which some use for illegal activities.

This is not the first case of personal data collected by the Moscow government’s facial recognition system being leaked online. Roskomsvoboda, which monitors the situation closely, has documented at least seven cases this year of Moscow residents whose personal data was offered for sale on the internet. The group also uncovered additional data security concerns, such as the fact that law enforcement officers receive access to the facial recognition data collected by CCTV cameras via online links that can be easily shared.

Apparently in response to Kuznetsova’s case and the other incidents, the investigative authorities in Moscow opened a criminal case into the alleged sale of facial recognition data by two law enforcement officials. However, the authorities do not seem to recognize the need for developing appropriate limits on collecting, processing, and storing personal data, which would help prevent such security breaches, Human Rights Watch said.

Russia’s law on personal data envisages a number of safeguards to protect personal data. They include a ban on excessive collection and storage of data, basic standards for data quality and security, and a requirement to obtain consent from the data subject to collect biometric data.

The Moscow IT Department, which operates the city’s facial recognition systems, says that when the system matches facial images from CCTV footage to images on police “wanted lists,” those matches are sent to law enforcement agencies for further investigation and identification. The IT Department claims that none of its officials access personal data during this process, since the department does not hold any personal data on the people on the police lists and does not identify the people who are matched. Hence, the department contends, the law’s provisions on biometric data should not apply to the data collected by Moscow’s facial recognition systems.

A case was filed in November 2019 challenging the alleged use of facial recognition technology by law enforcement officials in connection with a protester’s arrest. But the Savyolovsky District Court in Moscow rejected the suit, relying largely on the Moscow IT Department’s argument, and ruled that such data should not be considered personal data.

While the IT Department considers the personal data safeguards inapplicable to the images collected by the facial recognition systems, law enforcement procedures for processing data received from those systems are faulty and not open to public scrutiny, Human Rights Watch said. The criminal investigation into the alleged sale of data from Moscow CCTV by police officials highlights the implications of this system for the right to privacy.

The government should publish verifiable statistics on data breaches and the system’s performance and invite civil society experts and policymakers to engage in a debate regarding the necessity, proportionality, and legality of the use of facial recognition surveillance.

“Instead of expanding the use of facial recognition systems, Russian authorities should suspend the use of facial recognition technology nationwide,” Williamson said. “The authorities should conduct a thorough privacy and human rights impact assessment to determine whether the use of facial recognition can ever be rights compliant.”

Source: Human Rights Watch

- 391 reads

Human Rights

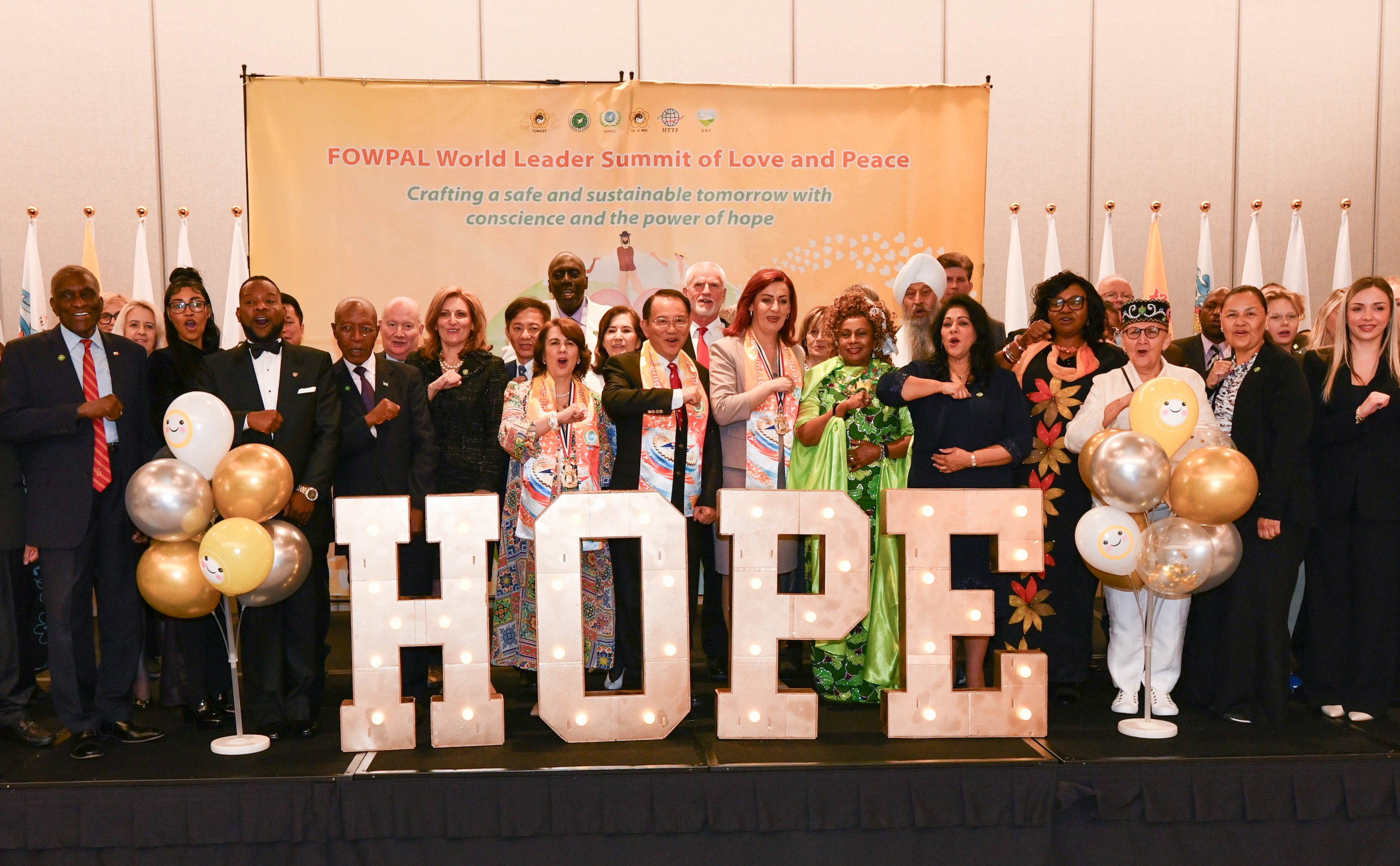

Fostering a More Humane World: The 28th Eurasian Economic Summi

Conscience, Hope, and Action: Keys to Global Peace and Sustainability

Ringing FOWPAL’s Peace Bell for the World:Nobel Peace Prize Laureates’ Visions and Actions

Protecting the World’s Cultural Diversity for a Sustainable Future

Puppet Show I International Friendship Day 2020