Hadoop Takes a Giant Step Toward the Enterprise

The formal debut of Hadoop 1.0 was six years in the making, but it's been well worth the wait given the enhanced reliability and security features that industry watchers believe will push the open-source computing platform into the enterprise and make it a mainstream system before long.

If you heard cheering as the new year kicked off, it wasn't all about the arrival of 2012. Some applause was from developers and data administrators welcoming the arrival of Hadoop 1.0, the open-source software framework from the Apache Software Foundation.

Why? Well, because taking advantage of big data, and managing big data, is a big deal these days. The ability to gain speed in data processing, data analytical efforts and data search is a big deal as well.

The opportunity to squeeze value from growing piles of data is fostering new revenue streams and helping companies gain efficiencies, but it calls for a scalable, dependable distributed computing environment. Hadoop provides that.

The applause was loud for another reason as well. Hadoop 1.0 had been long in the making, taking six years to arrive. Apache Hadoop Vice President Arun Murthy told eWEEK the wait was clearly worth it, as the version may put Hadoop on the mainstream enterprise map.

"In addition to the major security improvements and support for HBase, the really big deal about version 1.0 is this is a release we feel that people can look at as very stable," said Murthy. "The developer community is really up for supporting version 1.0, and we expect 1.0 adoption to be much faster than for other versions."

Hadoop 1.0 also illustrates how a collaborative development process should work. Hundreds of users, scientists and engineers were involved in its development. The result is a more secure, higher-performing platform for cloud computing environments and for big data management and analysis.

As Murthy notes in a press statement, Hadoop is fast becoming the data platform of choice. It's not a stretch to guess that the current list of Hadoop adopters will be much more extensive by year's end. The list of early adopters includes both big tech titans and small niche technology players.

Here's a glimpse at what some notable and some not-so-notable companies are doing with Hadoop today:

● Adobe uses Hadoop for social services, structured data storage and internal data processing. Right now it's got 30 nodes running Hadoop on clusters and is looking to build an 80-node cluster.

● Brockmann Consult GmbH, which provides environmental informatics and geoinformation services, is parallel processing big sets of satellite data for its Hadoop-based Calvalus system.

● eBay is using Hadoop for search optimization and research via a 532-node cluster.

● Eyealike, a visual media search technology, uses Hadoop for capabilities such as identifying facial similarity across large data sets and auto-tagging needs for social media.

● Facebook has two big Hadoop clusters for storing internal log and data sources and for data reporting, analytics and machine learning.

● Hulu uses Hadoop for log storage and analysis via a 13-machine cluster.

● Kalooga, which is a discovery service for image galleries, is using a 20-node cluster for Web crawling needs, analysis and events processing.

● Twitter uses Hadoop to store and process all its tweets and other data types generated on the social networking system.

● Yahoo has a Hadoop cluster of 4,500 nodes for research efforts around its ad systems and Web servers. It's also using it for scaling tests to drive Hadoop development on bigger clusters.

Such wide-ranging adoption across industries is impressive. Hadoop, as one industry leader described it, is to data processing, management and storage what the Linux evolution was for advanced open-source operating systems.

Hadoop's potential is bright, and the next big release is predicted to come in the middle of this year. The cheers, I'm guessing, will be even more robust six months from now.

Source: Smarter Technology

- 557 reads

Human Rights

Fostering a More Humane World: The 28th Eurasian Economic Summi

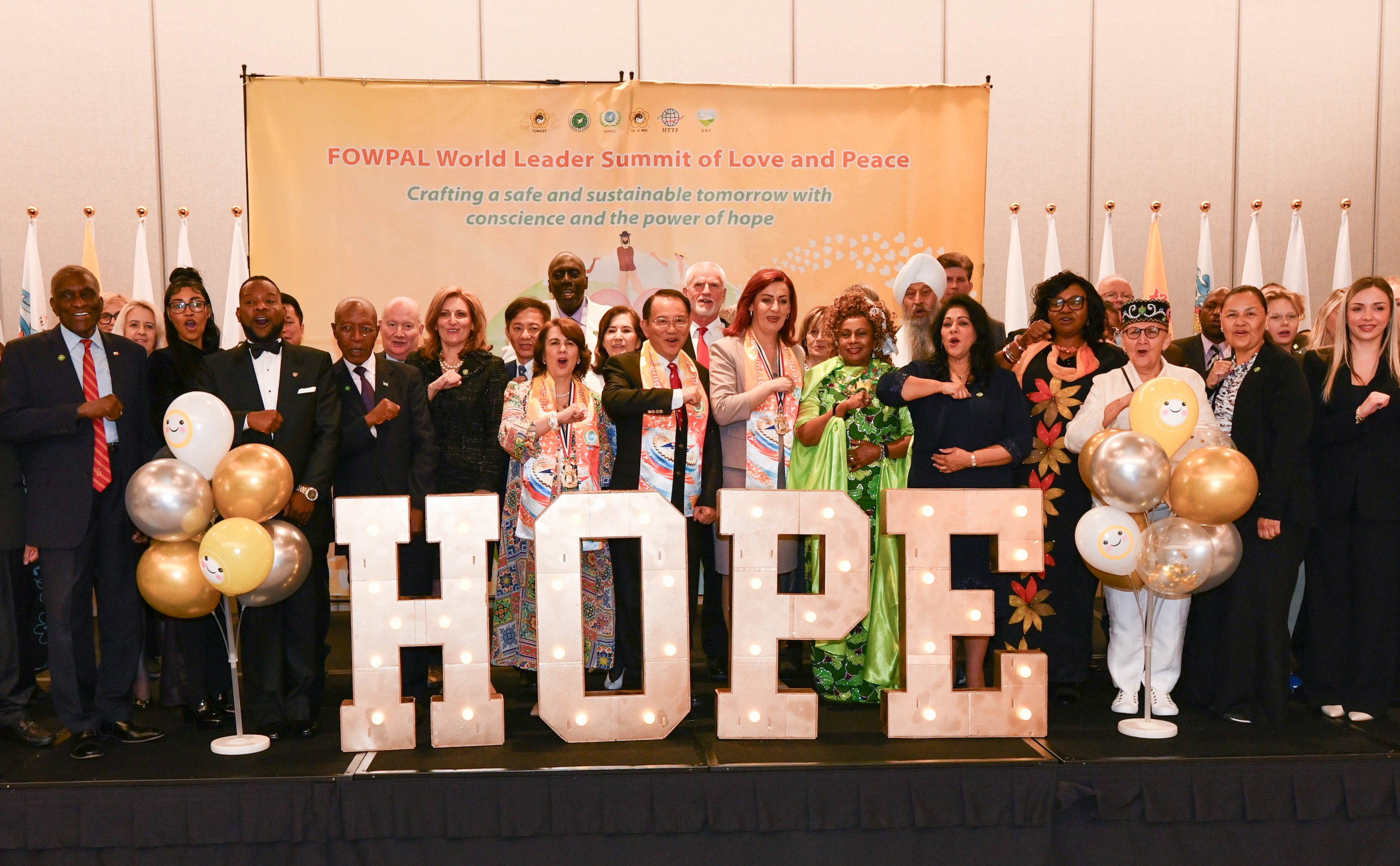

Conscience, Hope, and Action: Keys to Global Peace and Sustainability

Ringing FOWPAL’s Peace Bell for the World:Nobel Peace Prize Laureates’ Visions and Actions

Protecting the World’s Cultural Diversity for a Sustainable Future

Puppet Show I International Friendship Day 2020